Google launches Gemini 2.5 Deep Think for Google AI Ultra subscribers [*Note: this is a version of the Deep Think model that achieved gold-medal status at the IMO]

Friday, August 1, 2025 8:19:08 AM EST

How Deep Think works: extending Gemini’s parallel “thinking time”

Just as people tackle complex problems by taking the time to explore different angles, weigh potential solutions, and refine a final answer, Deep Think pushes the frontier of thinking capabilities by using parallel thinking techniques. This approach lets Gemini generate many ideas at once and consider them simultaneously, even revising or combining different ideas over time, before arriving at the best answer.
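The post does not say how parallel thinking is implemented. As a rough illustration only, the toy Python sketch below mimics the generate, revise, combine, and select loop described above on a trivial search problem: several independent paths start from different guesses, each is refined in parallel, the two strongest are merged into a new candidate, and the highest-scoring idea wins. The objective `score` and the helpers `propose`, `refine`, `combine`, and `parallel_think` are hypothetical stand-ins chosen for this example; they are not Gemini APIs and do not represent Google's method.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def score(x: int) -> int:
    """Hypothetical quality measure for an 'answer'; the best possible answer is 7."""
    return -(x - 7) ** 2


def propose(seed: int) -> int:
    """One independent line of thought: a seeded random starting guess."""
    return random.Random(seed).randint(-100, 100)


def refine(x: int, steps: int) -> int:
    """Revise an idea by greedy local improvement (simple hill climbing)."""
    for _ in range(steps):
        best = max((x - 1, x, x + 1), key=score)
        if best == x:  # no neighbor is better, so stop early
            break
        x = best
    return x


def combine(a: int, b: int) -> int:
    """Merge two ideas into one; here, simply their integer midpoint."""
    return (a + b) // 2


def parallel_think(n_paths: int, refine_steps: int) -> int:
    """Explore n_paths ideas concurrently, refine them, merge the best two, pick the winner."""
    with ThreadPoolExecutor(max_workers=n_paths) as pool:
        # Generate many ideas at once, one per independent path.
        ideas = list(pool.map(propose, range(n_paths)))
        # Revise every idea in parallel.
        ideas = list(pool.map(lambda x: refine(x, refine_steps), ideas))
    # Combine the two strongest ideas into a new candidate and revise it as well.
    if len(ideas) >= 2:
        first, second = sorted(ideas, key=score, reverse=True)[:2]
        ideas.append(refine(combine(first, second), refine_steps))
    # Select the best answer found across all paths.
    return max(ideas, key=score)


print(parallel_think(n_paths=8, refine_steps=3))
```

Threads are used here only to show that the paths are independent and can run concurrently; for real CPU-bound work, a process pool or batched model calls would be the more usual choice.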

Moreover, by extending the inference time, or “thinking time,” we give Gemini more time to explore different hypotheses and arrive at creative solutions to complex problems.
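One idealized way to see why extra exploration can help: if each independent reasoning path reaches a correct answer with probability p, and a perfect checker can recognize a correct one, the chance that at least one of k paths succeeds is 1 - (1 - p)^k. This is a simplifying assumption made for illustration (independent paths, a perfect verifier), not a description of how Gemini spends its thinking time; `success_probability` is a hypothetical helper.

```python
def success_probability(p: float, k: int) -> float:
    """Idealized P(at least one of k independent paths is correct)."""
    if not 0.0 <= p <= 1.0 or k < 0:
        raise ValueError("p must be in [0, 1] and k must be >= 0")
    # Probability that every one of the k paths fails is (1 - p) ** k.
    return 1.0 - (1.0 - p) ** k


# More paths (a larger thinking budget) raise the chance of success.
for k in (1, 2, 4, 8, 16, 32):
    print(f"k={k:2d}: {success_probability(0.2, k):.3f}")
```

With p = 0.2, a single path succeeds 20% of the time and two paths succeed 36% of the time. The k-th path adds only p(1 - p)^(k-1), so under this model each additional path contributes less than the one before it.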

We’ve also developed novel reinforcement learning techniques that encourage the model to make use of these extended reasoning paths, thus enabling Deep Think to become a better, more intuitive problem-solver over time.

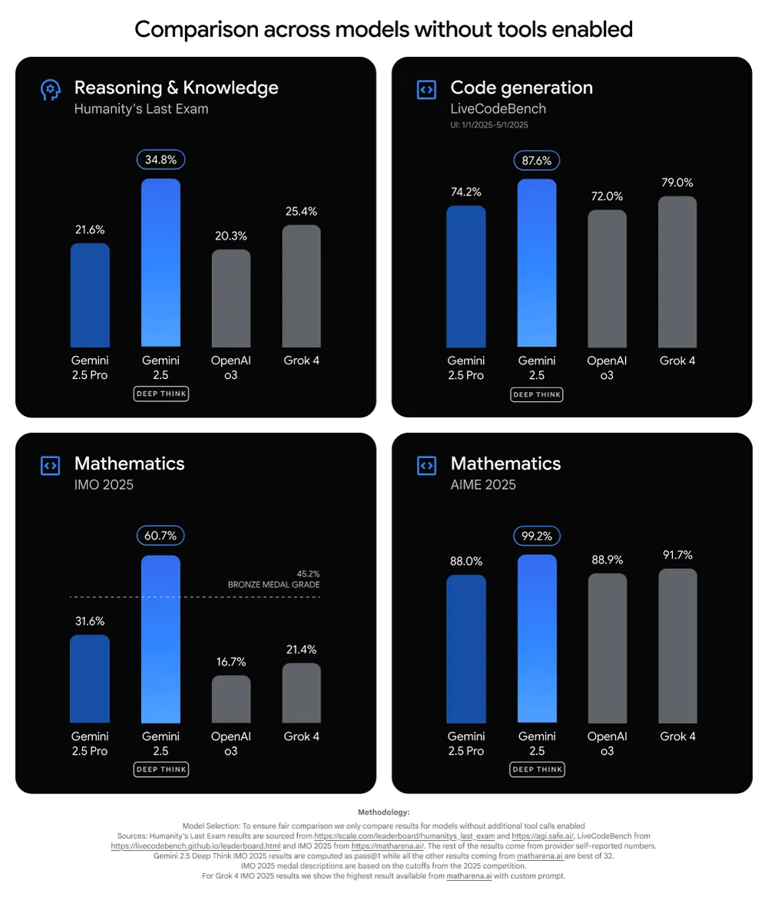

Deep Think’s performance is also reflected in challenging benchmarks that measure coding, science, knowledge, and reasoning capabilities. For example, compared with other models without tool use, Gemini 2.5 Deep Think achieves state-of-the-art performance on LiveCodeBench V6, which measures competitive coding performance, and on Humanity’s Last Exam, a challenging benchmark that measures expertise across domains, including science and math.

https://blog.google/products/gemini/gemini-2-5-deep-think/
